At Picnic, we do our best to ensure that the information we provide to our customers is relevant and up to date. In order to do so, we provide most of it through our backend systems. This allows us to have real-time control over the information and any changes that we may want to apply over time.

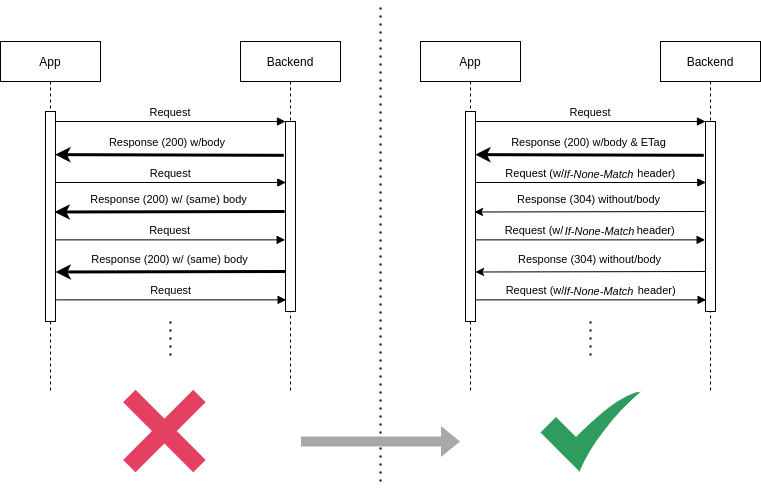

Now, you may be thinking, what about all the information that doesn’t change throughout days, weeks, or even months? Do you really need to send it over the wire, every time, to every customer? Isn’t this unnecessary, overkill, and an inefficient use of bandwidth? Yes, it definitely is! A significant amount of the requests made by our app result in the same response for the customer. Sending that response over the wire every time is a waste of bandwidth.

Caching responses in mobile applications might help, but it could mean that customers see outdated information. However, nothing stops us from still querying our backend systems every time, but avoiding transferring information to clients if it matches the information they already have cached.

HTTP caching & entity tags

Fortunately, these are issues that the HTTP protocol allows us to address through a mechanism called ETag (entity tag) caching. And so, last year, we embarked on a journey to discover how we can get the most out of ETag caching on some of our highest-traffic requests. Our goal? To avoid transferring the same data over and over when customers already have it on their devices.

The first choice we had to make was what our cache-control directive should be. Cache-control is an HTTP header used to specify caching policies to be respected by both servers and clients, and the HTTP protocol provides many different directives for dealing with cached information. Up until now, all our requests had a no-store directive. This way, we ensured our clients did not store any information received. In other words, we chose always transferring up-to-date information over optimizing for bandwidth.

However, the information we provide about our catalog and the products in our assortment can be safely stored on customers’ devices without representing any sort of security risk for them. We chose these particular requests because they often have the same response body: our assortment changes at a slower rate than our customers browse it. That said, we still want to force our clients’ devices to re-validate their cached responses. This way, the customer never sees a cached response when more up-to-date information is available in the backend. To achieve this, we applied the no-cache directive, which forces the clients’ devices never to use a cached response to satisfy a subsequent request without first successfully re-validating it with the backend.
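To make the difference between the two directives concrete, here is a minimal sketch of how a client cache might interpret them. The function name `cache_policy` and the returned keys are made up for illustration, and the parsing deliberately ignores directives that take arguments in quoted form.

```python
def cache_policy(cache_control: str) -> dict:
    """Interpret the two Cache-Control directives discussed above.

    Hypothetical helper for illustration only; real HTTP clients
    implement many more directives than these two.
    """
    directives = {d.strip().lower() for d in cache_control.split(",") if d.strip()}
    # no-store: the response must not be written to the cache at all.
    may_store = "no-store" not in directives
    # no-cache: the response may be stored, but must be re-validated
    # with the origin server before every reuse.
    must_revalidate = may_store and "no-cache" in directives
    return {"may_store": may_store, "must_revalidate": must_revalidate}
```

With `no-store` nothing is kept on the device, while with `no-cache` the response is kept but every reuse is preceded by a round trip to the backend.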

Sounds good, but how exactly does it work?

Having the cache-control directive with the right value is necessary, but not enough. To make this work we need an identifier for each resource that we can use to assess whether the client has the most up-to-date version of that resource. This is where entity tags come in. The ETag is an identifier placed in the response header “ETag”, whose value identifies the current version of the requested resource. By providing our clients with this entity tag when sending them the resource, we enable them to make conditional requests. Conditional requests are broadly supported by HTTP clients, which start making them as soon as they receive the right response headers, meaning our apps did not need to be updated. In this case, once clients receive the no-cache cache-control directive and an entity tag in the ETag header, they start making conditional requests to the origin server. More specifically, the next request made to the same URL will be a conditional one: its request headers include the conditional request header If-None-Match, containing the last received entity tag value to be verified by the backend.

Once the request reaches the backend, and before the response is returned, the server evaluates whether the new response’s entity tag matches the one provided in the request header. If so, the response code is changed to 304 NOT MODIFIED, with an empty response body, saving precious bandwidth.
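The exchange described above can be sketched in a few lines. This is a simplified illustration rather than our actual backend code: the function names are hypothetical, the hash function is an arbitrary choice, and the comparison assumes a single entity tag in If-None-Match (the header may also carry a list of tags or `*`).

```python
import hashlib


def compute_etag(body: bytes) -> str:
    """Derive an entity tag from the response body alone (quoted, as HTTP requires)."""
    return '"' + hashlib.sha256(body).hexdigest() + '"'


def respond(request_headers: dict, body: bytes) -> tuple:
    """Return (status, headers, body) for a freshly computed response body."""
    etag = compute_etag(body)
    headers = {"ETag": etag, "Cache-Control": "no-cache"}
    # If the client already holds this exact version, skip the body entirely.
    if request_headers.get("If-None-Match") == etag:
        return 304, headers, b""
    return 200, headers, body


# First request: no validator yet, so the full body is transferred.
status, headers, _ = respond({}, b"catalog-v1")
# Second request: the client echoes the entity tag it received.
status_again, _, body_again = respond({"If-None-Match": headers["ETag"]}, b"catalog-v1")
```

Note that the backend still computes the full response in both cases. What is saved is the transfer of the body, not the work of producing it.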

Having studied the functionality of HTTP caching and entity tags, we put our newly found knowledge to work, and released to our customers a new version of our services with entity tags applied, saving bandwidth and possibly even making our customers’ mobile data bills slightly lower.

A bump in the road as customers are logged out

For about a month, our fresh caching implementation was working perfectly. However, after this month, we started noticing some weird behavior, with customers being consistently logged out. At first, it wasn’t even clear to us that this issue was related to our new caching mechanism. However, after some detective work, we realized that even though our customers were not being logged out on cached requests, they were always being logged out on the subsequent request, after one they had cached. Moreover, the request triggering this problem was not the same for every customer.

At this point, even though we had not yet found the exact culprit, it was clear to us that the issue was indeed related to the caching configuration on our clients’ devices. Therefore, we immediately disabled ETags, restoring normal behavior for our customers. Next, we got to work, digging into why our customers started being logged out in the first place.

Are headers included?

It wasn’t easy, but eventually we realized how having enabled ETags in our systems had the delayed effect of our customers being logged out. HTTP headers are essential for many reasons, one of which is communicating authentication tokens between the client and backend. In our case, our customers’ authentication tokens can be refreshed for up to a month. When the token provided in a request needs refreshing, the refreshed token is returned to the client in a response header.

It was only at this point that we realized that different implementations of the caching protocol may not necessarily match each other, even though both respect the defined HTTP protocol. To get to the root cause of our problem, we had to dive deeper into not only the backend server’s implementation of ETags, but also the client’s implementation of the HTTP protocol. It was around this moment that we completely understood what was causing this erratic behavior: a slight difference between the server’s and the client’s implementations.

- The client’s implementation of the HTTP protocol also caches the HTTP response headers;

- The backend implementation, on the other hand, based on the Spring implementation of ETag filters, considers only the response body for the entity tag calculation.

This means that in the client, for a 304 NOT MODIFIED response, not only is the cached response body considered, but also the response headers from the cached response (even though the headers received in the 304 response override the cached ones).

Consider a situation in which an authentication token is sent as a response header because the token needed refreshing. This header may then be cached along with the rest of the response. Later on, once the same request is repeated and the response is a 304 NOT MODIFIED, the client will use the cached token unless the 304 response carries a new one in its headers. This was the problem, as we only expected this token to be part of a response when it is the first response to this client after being issued!
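A toy model of the client side makes the failure mode easier to see. The class below is a sketch under assumptions: it is not the HTTP library our apps use, and `X-Auth-Token` is a made-up header name standing in for the real one.

```python
class ClientCache:
    """Toy HTTP client cache keyed by URL that stores headers along with the body."""

    def __init__(self):
        self._entries = {}

    def handle_response(self, url, status, headers, body):
        """Return the (headers, body) pair the application ends up seeing."""
        if status == 304:
            cached_headers, cached_body = self._entries[url]
            # Headers on the 304 override cached ones; all others are replayed.
            merged = {**cached_headers, **headers}
            self._entries[url] = (merged, cached_body)
            return merged, cached_body
        self._entries[url] = (dict(headers), body)
        return dict(headers), body


cache = ClientCache()
# Day 1: the token happened to be refreshed on this response, so it gets cached.
cache.handle_response(
    "/catalog", 200, {"ETag": '"v1"', "X-Auth-Token": "token-A"}, b"products"
)
# Weeks later: the backend answers 304 and sends no token header at all...
seen_headers, seen_body = cache.handle_response("/catalog", 304, {"ETag": '"v1"'}, b"")
# ...yet the application is handed the old token from the cached headers.
stale_token = seen_headers["X-Auth-Token"]
```

In this model the application sees `token-A` again on the 304, even though the backend never sent it in that response. If the app treats any token header it sees as the latest one, its next request carries a token that has since expired.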

Long story short, ETags became the reason why some clients started considering expired tokens as fresh ones, resulting in our backend services considering the clients unauthenticated.

Takeaways

At this point, we fully understood the problem at hand. The next steps were to devise a solution and, hopefully, make our caching implementation more robust, giving us better control of the caching mechanism from the backend and allowing us to quickly and effectively tackle any similar issue that might arise in the future.

Vary header

The most important first step is to ensure that this particular situation can no longer occur. To do so, the name of the header carrying the authentication token was added into the Vary header’s value. But what exactly is the effect of this?

By adding this particular header to the Vary header value, we are informing cache implementations that they must not use this response to satisfy a later request unless that request carries the same value for this header as the request that produced the cached response. As a result, every time our clients receive a new token as part of a response header, subsequent requests that have a cached response stored under a different token header value won’t attempt to use that cached response. Instead, they request the response from the origin server unconditionally, thereby solving this issue for good.
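As a rough illustration of the check a cache performs, the sketch below decides whether a stored response may be reused for a new request. The function name `can_reuse` and the header name `X-Auth-Token` are assumptions made for the example, and real caches normalize header values more carefully than this.

```python
def can_reuse(cached_request_headers, cached_response_headers, new_request_headers):
    """Return True if the Vary header allows the cached response to serve the new request."""
    vary = cached_response_headers.get("Vary", "")
    names = [name.strip().lower() for name in vary.split(",") if name.strip()]
    # "Vary: *" means the response can never be matched to a later request.
    if "*" in names:
        return False
    old = {k.lower(): v for k, v in cached_request_headers.items()}
    new = {k.lower(): v for k, v in new_request_headers.items()}
    # Every header named in Vary must have the same value in both requests.
    return all(old.get(name) == new.get(name) for name in names)


response_headers = {"ETag": '"v1"', "Vary": "X-Auth-Token"}
same_token = can_reuse({"X-Auth-Token": "A"}, response_headers, {"X-Auth-Token": "A"})
new_token = can_reuse({"X-Auth-Token": "A"}, response_headers, {"X-Auth-Token": "B"})
```

Here `same_token` is `True` and `new_token` is `False`: once the client holds a different token, the entry cached under the old token is skipped and a fresh response is fetched.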

Backend controlled cache versioning

Even though we had already found a solution for this particular problem, we should always strive to make our systems more robust, so that the handling of similar issues in the future is as smooth as possible. To this end, we also applied some changes of our own to the backend (BE) caching mechanism.

From the start, we calculated the entity tag value over just the response body. To give us a bit more control over this, we wanted to reach a state where we could, if we chose to, reset all cached responses from the BE with little effort.

To do so, we simply appended a salt value to this calculation. We can easily change this value through configuration in our BE systems, which allows us to reset all cached responses on clients in under 30 minutes, without requiring an app update or deleting any files on our clients’ devices.
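The idea can be sketched as follows. This is an illustration only: the parameter name `cache_version`, the hash function, and the example values are assumptions, not our production configuration.

```python
import hashlib


def compute_etag(body: bytes, cache_version: str) -> str:
    """Entity tag over the response body plus a configurable salt value."""
    digest = hashlib.sha256()
    digest.update(body)
    # Changing the salt changes every entity tag, even for identical bodies.
    digest.update(cache_version.encode("utf-8"))
    return '"' + digest.hexdigest() + '"'


body = b'{"products": []}'
before = compute_etag(body, cache_version="2023-01")
after = compute_etag(body, cache_version="2023-02")
```

Because `before` and `after` differ, the entity tags that clients send in If-None-Match stop matching as soon as the salt changes. Every conditional request then gets a full 200 response, which replaces the cached copy together with its headers.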

Results

The request in question can have very different response sizes depending on the request and the current assortment. We estimate the average response size to be around 30 KB. Based on this assumption, on the day from which we extracted the above graphs, we had 1,120,093 cache hits, which means that we save an estimated 32 GB of bandwidth per day on average.
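The estimate is a simple multiplication, spelled out below. The only assumption added here is the unit convention: the 32 GB figure corresponds to binary units (1 GB = 1024 × 1024 KB), and the decimal variant is shown for comparison.

```python
CACHE_HITS = 1_120_093   # cache hits on the sampled day
AVG_RESPONSE_KB = 30     # estimated average response size


def saved_gigabytes(cache_hits, avg_response_kb, kb_per_gb=1024 ** 2):
    """Bandwidth not transferred, assuming each hit avoids one average-sized body."""
    return cache_hits * avg_response_kb / kb_per_gb


binary_estimate = saved_gigabytes(CACHE_HITS, AVG_RESPONSE_KB)
decimal_estimate = saved_gigabytes(CACHE_HITS, AVG_RESPONSE_KB, kb_per_gb=1000 ** 2)
```

This gives roughly 32 GB in binary units and about 33.6 GB in decimal units. Either way, the figure ignores the small cost of the 304 responses themselves, which still carry headers.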

Conclusion

It’s easy to simply not use any sort of caching mechanism; to always send the information we want over the wire, not worrying about the extra complexity on our client-server communications. But ‘easy’ is not what we strive for! More robust, more scalable, and more efficient client-server communication is the goal. And if we’ve already sent this information to the client, why should we send it again? There is no point in sending the same letter to the same person twice, right?

With each day and improvement, we work to make the service we provide to our customers better. By enabling caching and conditional requests, we take a step in that direction!

At Picnic, we do almost everything in house. Want to help us revolutionize grocery shopping? Come join our team.