The story of building an automated warehouse without having one — Part I

A wintery day

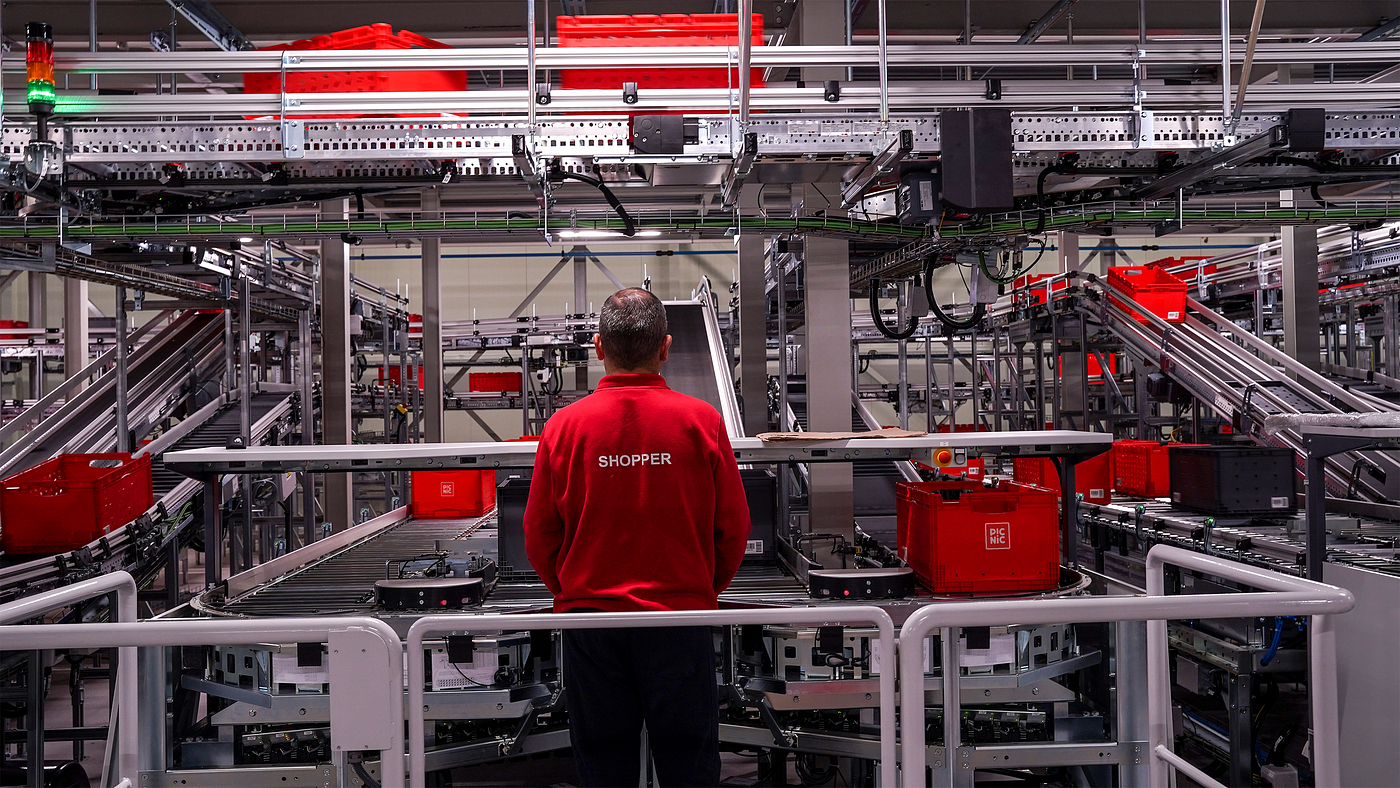

It is a cold, dark, wintery day. You are at your desk in your first week as a programmer in a new job. The software you are tasked to produce should, in several months, control the hundreds of robots, conveyor belts, and user interfaces of the largest automated grocery warehouse in the country. It is a crucial bet: succeeding means unlocking critical growth, while failing means the strategic plans your company built over years could take a severe hit. You are made responsible for designing the testing strategy of that software.

There is more. While you work on the software, the warehouse is an empty building. An army of technicians will start assembling the hardware in weeks: stations, screens, servers, PLCs. It will take them several months. The software your application will communicate with is not built either. All you have is an API specification.

Before we start, I would like you, the reader, to pause and think for a moment: what would you do? How would you ensure your team built the right thing almost two years before the whole warehouse, with its people, hardware, and software, went live?

Our team stood in a similar situation around March 2020. Join us in this series of articles as we go back in time to the day we asked ourselves the same question, and follow the story to the present day, when our automated fulfillment center is our largest in volume.

In this blog post, we will see how caring about the end result, and not stopping at the obvious, took us a long way. Let’s start!

A flammable cocktail

Building complex systems is hard. More so when their functioning depends on the harmonious interaction between many parts, built by different teams in multiple countries. Even more so when deadlines are tight. It then becomes a flammable cocktail: every part must be ready on time and function correctly with the others.

There were many moving parts indeed. Before our team was even formed, the initial design of the warehouse was conceived by Picnic’s Automation team. At the time we started writing the first lines of the Warehouse Control System (WCS) code, the hardware components were being shipped to Utrecht. A different backend team outside of Picnic tweaked WCS’ cousin, the Transport System, which would act as an intermediary between the high-level instructions of our software and the hardware. Simultaneously, Picnic’s designers were studying how to make the system usable for our Operations team, and several other backend teams were making sure they could support this new type of operation internally. And that was only outside of the team. Inside, we had to deal with a lot of complexity as well: dozens of flows had to be developed in parallel by different people on the team.

We had to be quick. Everyone involved had a big “red cross” on the calendar: we had to be ready at the beginning of 2022. That meant a bit less than two years to build, test, and commission everything. Interfaces are one of the biggest risks; if we were to make it, we knew our software needed to work flawlessly before commissioning, when all the parts would be tested together for the first time. How could we learn as much as possible before we could test the whole?

More than automation

Manual testing was out of the question. Starting from scratch, we needed quick feedback on the features we built, which meant a lot of testing. It would have taken too much time, and frustration, to do it manually. We knew we had to automate things.

Automation by itself was not good enough: the scenarios we had to bring under test were too complex. Let’s illustrate this with one of the first, and simplest, features we built: introducing and storing a pallet of stock (such as apples) in the warehouse. Our backend needs to make 23 calls to other systems for every single item added.

The obvious way of testing this would have been to write assertions for each action our software performed in response to an input. To test our single apple pallet introduction, this effectively means writing a variation of this statement 23 times:

Given precondition A

When event B happens

Then WCS performs action C

Given precondition D

When event E happens

Then WCS performs action F

(and so on)

This is not scalable, and very error-prone. Our system, heavy on business logic, has many different flows and edge cases, each requiring a slight change in this list. Additionally, the syntax does not tell us much about the why. How could a team member working in another part of the code understand if a given action performed was “right”? What about our business stakeholders? This approach was far from ideal.

Fake it until you make it

Instead, our team had an idea. Rather than thinking about each component in isolation, we framed the situation as: how can we best ensure that, when ready, all the moving parts work as expected? We cared more about the end result than about the incremental impact our product had.

The follow-up question was: is there really no way we can test the system as a whole, without the other components? It felt as if we were trying to measure the speed of a car with only an unfinished steering wheel in our hands. Then an idea came: we would simulate all the missing parts. We would fake the warehouse until it was made.

Our goal was to think in complete business features, rather than single actions. Business features are easier to understand, and their complexity is limited. We also believed it would be a great learning opportunity, as the code would behave as it was meant to from the beginning: the smoothest transition to commissioning we could think of. That was our dream: to be able, at some point and with the snap of a finger, to replace the simulation with the real components without our software noticing the change.
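To make the "snap of a finger" idea concrete, here is a minimal Python sketch of one way such a swap can be structured. It is not our actual code: the names `TransportSystem`, `SimulatedTransportSystem`, `WarehouseControl`, `move`, and `location_of` are hypothetical and chosen only for illustration. The assumption is that the business logic talks to an interface, so a simulated implementation can later be replaced by one that calls the real API.

```python
from typing import Dict, Optional, Protocol


class TransportSystem(Protocol):
    """Hypothetical interface: what the control software expects from the layer below."""

    def move(self, load_id: str, destination: str) -> None: ...

    def location_of(self, load_id: str) -> Optional[str]: ...


class SimulatedTransportSystem:
    """In-memory stand-in: a move completes instantly and is only recorded."""

    def __init__(self) -> None:
        self._locations: Dict[str, str] = {}

    def move(self, load_id: str, destination: str) -> None:
        self._locations[load_id] = destination

    def location_of(self, load_id: str) -> Optional[str]:
        return self._locations.get(load_id)


class WarehouseControl:
    """Business logic that only knows the interface, not which implementation is behind it."""

    def __init__(self, transport: TransportSystem) -> None:
        self._transport = transport

    def store(self, load_id: str) -> None:
        # In this sketch, storing a load is a single move to a location named "storage".
        self._transport.move(load_id, "storage")


# Wire the business logic to the simulation; a real client would be passed in the same way.
transport = SimulatedTransportSystem()
wcs = WarehouseControl(transport)
wcs.store("pallet-1")
print(transport.location_of("pallet-1"))  # prints "storage"
```

Because `WarehouseControl` receives its dependency through the constructor, nothing in it would need to change when the simulated class is replaced by one that talks to real hardware.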

In short, this meant that rather than building the best steering wheel we could in isolation, we aimed to build the fastest car (in a simulation).

But before reaching high speeds, we knew we first had to be able to run at 30 km/h.

Running at 30 km/h

What does “running at 30 km/h” mean for an automated warehouse? Let’s borrow our stock introduction feature from before. It looks something like this:

Given operator X will introduce products in <station>

And <station> contains 1 apple

When operator X starts decanting

Then <warehouse storage> eventually contains 1 apple

Note: the snippet above is an actual executable test using Gherkin syntax.
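The word "eventually" matters in that last step: the apple travels through the system asynchronously, so the test has to wait for the outcome rather than check it immediately. The step definitions behind our test are not shown here, so the following is only a hedged Python sketch of how such a step could be backed by polling. The `eventually` helper and the `SimulatedWarehouse` class are hypothetical names made up for this illustration.

```python
import threading
import time
from typing import Callable, Dict


def eventually(condition: Callable[[], bool], timeout: float = 2.0, interval: float = 0.01) -> bool:
    """Poll `condition` until it returns True or `timeout` seconds have passed."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if condition():
            return True
        time.sleep(interval)
    return condition()  # one last check at the deadline


class SimulatedWarehouse:
    """Toy simulation: stock moves from the station to storage after a delay."""

    def __init__(self) -> None:
        self.station: Dict[str, int] = {}
        self.storage: Dict[str, int] = {}
        self._lock = threading.Lock()

    def start_decanting(self, travel_time: float = 0.05) -> threading.Thread:
        def transport() -> None:
            time.sleep(travel_time)  # simulated travel over the conveyors
            with self._lock:
                for product, quantity in self.station.items():
                    self.storage[product] = self.storage.get(product, 0) + quantity
                self.station.clear()

        worker = threading.Thread(target=transport)
        worker.start()
        return worker

    def stored(self, product: str) -> int:
        with self._lock:
            return self.storage.get(product, 0)


warehouse = SimulatedWarehouse()
warehouse.station["apple"] = 1                                # And <station> contains 1 apple
worker = warehouse.start_decanting()                          # When operator X starts decanting
arrived = eventually(lambda: warehouse.stored("apple") == 1)  # Then storage eventually contains 1 apple
worker.join()
```

The test only states the business outcome. How many internal calls it took to get the apple into storage is left to the implementation.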

Simple, understandable, and business-relatable. No matter the implementation details, our team knew that if the apple did not end up in storage, something had gone terribly wrong. We built as many tests as there were business features; it was scalable. Additionally, it enabled our QA team to start thinking of test cases as soon as the business requirements (what our software should do) were clear, without needing to wait until the code was written.

MVP thinking

In hindsight, building a simulation was a key decision. It bootstrapped the learning process of our team and enabled us to deliver complete features right from the start of the development process. This is MVP thinking: building a minimal version of a complete functionality to get feedback as early as possible and maximize learning, as opposed to focusing on incremental steps. We tested the speed of a minimal version of our car, instead of building one of the four wheels. Remember: you still cannot test the speed of a car with only a steering wheel and four wheels!

MVP thinking in this case meant that rather than aiming to “support messages of type X” (building one of the wheels), we aimed to “support picking in a simulated environment” (running at 30 km/h), which was the closest we could get to the real feature at the time. It was a challenging setup, but it allowed us to learn the real behavior of our software well in advance and to catch bugs as early as we could. It also motivated us, as we delivered complete functionalities, and it made it easy for the business to understand our progress in tangible terms. All in all, we took ownership of the end result, and that turbocharged our learning.

The missing pieces

This is much easier said than done. Achieving it was far from trivial and required quite some additional work in the planning. For example, to make the “apple in storage” feature work, we needed to simulate:

- An operator at a decant station ready to introduce items

- Stock in the decant station for the operator to pick

- A network of conveyors matching the actual layout of the warehouse

- A storage system matching the shuttle-based one we use

- A “software layer” matching the real API
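To give a feel for one of these pieces, the conveyor network can be thought of as a directed graph. The Python sketch below is purely illustrative and assumes a made-up layout: the segment names and the `find_route` function are hypothetical and do not describe our real warehouse or our real simulation.

```python
from collections import deque
from typing import Dict, List, Optional

# Hypothetical layout: each conveyor segment lists the segments it feeds into.
LAYOUT: Dict[str, List[str]] = {
    "decant-station": ["main-loop"],
    "main-loop": ["aisle-1-infeed", "aisle-2-infeed"],
    "aisle-1-infeed": ["storage-aisle-1"],
    "aisle-2-infeed": ["storage-aisle-2"],
    "storage-aisle-1": [],
    "storage-aisle-2": [],
}


def find_route(layout: Dict[str, List[str]], start: str, goal: str) -> Optional[List[str]]:
    """Breadth-first search for the shortest sequence of segments from start to goal."""
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for next_segment in layout.get(path[-1], []):
            if next_segment not in visited:
                visited.add(next_segment)
                queue.append(path + [next_segment])
    return None  # goal is not reachable from start


route = find_route(LAYOUT, "decant-station", "storage-aisle-2")
# route == ["decant-station", "main-loop", "aisle-2-infeed", "storage-aisle-2"]
```

Conveyors only run one way, so a directed graph is a natural fit: there is no route from a storage aisle back to the decant station in this toy layout, and `find_route` returns `None` for it.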

Was this extra work worth it? And how would we simulate all this?

We will answer these questions in Part II of this series, coming next week, where we will dive deeper into how we built it, and why some parts were harder than we initially thought. See you there!