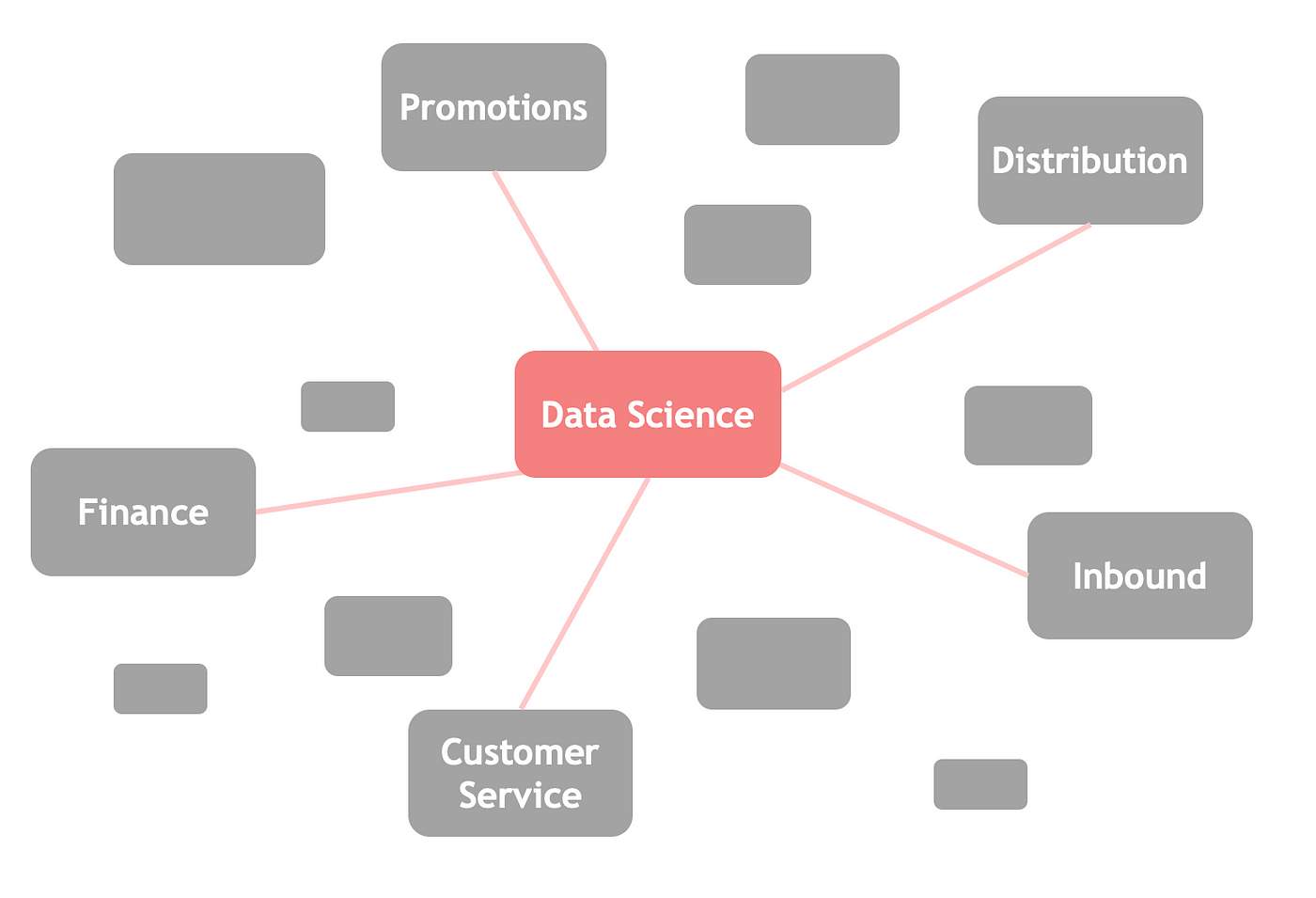

Here at Picnic, we love data. Over the last few years, Picnic has grown into a data-driven online supermarket that is active in three countries. By leveraging data and algorithms, we have been able to support the company’s growth while maintaining high service levels. Besides numerous demand forecasting models, we have, for example, built machine learning models to improve our customer service and increase the efficiency of our trips. The number of machine learning (ML) solutions within Picnic has been growing steadily, and these solutions have been delivering a lot of business value. That said, this of course doesn’t mean that we are done. How can we bring our data science game to the next level? How can we go from tens of models to hundreds of models in order to make an impact in every part of the business? In this blog post, we will dive into our plans to further scale data science at Picnic.

Data Science at Picnic

We have been building and maintaining our ML systems as a central Data Science team with a lot of emphasis on software engineering skills. So far, this has been working great for Picnic. We skipped the Jupyter Notebook phase. From the start, we aligned our tech stack with the rest of Picnic’s Tech team and adopted the same DevOps practices. On top of that, the Data Science team is able to tackle the entire lifecycle, from ideation and scoping to packaging the model and deploying it on Kubernetes. We don’t train models and throw them over a fence. Instead, we foster a strong “you train it, you own it” mentality.

We have been able to build ML solutions that integrate well with other systems and processes within Picnic, while simultaneously standardizing some of the common components in an ML system as we go. For example, we have built an in-house model registry to simplify model storage and retrieval, and a prediction archive to standardize the logging of predictions for long-term storage and analysis.

However, in order to continue supporting the growth of the company, we also recognize that we have to become better at scaling machine learning solutions in the coming few years. The number of use cases for ML is increasing faster than we can grow our team. Additionally, the more we build, the more time we spend on maintaining existing solutions. Hence, we need to increase the number of models a single data scientist can build and manage, as well as the number of data science practitioners in our company.

MLOps to the rescue

To further scale and accelerate data science within Picnic, we have initiated a focused effort on Machine Learning Operations (MLOps). MLOps is a set of practices for reliably building, testing, deploying and maintaining machine learning solutions. Its benefit is that it smooths and shortens the machine learning lifecycle, for example by automating model retraining and deployment, creating reproducible experiments, and managing and tracking model artifacts.

There is quite a bit of overlap between DevOps and MLOps, as many blog posts already point out. Many of these practices and processes have already been adopted by Picnic’s Data Science team. Yet, there are definitely some unique aspects of machine learning solutions that are not yet covered by our current tooling and infrastructure, such as actionable alerting on model drift and easy model deployment on GPUs.

As mentioned earlier, we also want to increase the number of data science practitioners. In order to enable more people at Picnic to build high quality ML systems, we need to solidify MLOps practices more explicitly through standardization. We also require more automation in the tooling and infrastructure used across the machine learning lifecycle. Last, but certainly not least, the complexities of the wide array of technologies used when building an ML solution should be abstracted away.

Thus, Picnic’s vision for its Data Science Platform was born. In the following sections, we will dive deeper into our vision for the platform and the principles that we are keeping in mind while building it.

Picnic’s Data Science Platform

The vision of Picnic’s Data Science Platform (DSP) is that everyone at Picnic can deliver industry-leading machine learning systems.

The users of the DSP are not just the central Data Science team. Business analysts and developers from other teams should just as easily be able to use the platform and collaborate on models in order to achieve the best solution possible.

More concretely, the DSP should provide a collection of tooling, infrastructure, and best practices to scale industry-leading ML systems at Picnic. It helps its users reduce the time-to-market and increase the quality of their systems, while eliminating complexity.

When designing a platform that supports Picnic’s ML systems of the future, we keep in mind a set of core principles. The following eight principles align with our vision and are core to our platform.

Collaboration

Collaboration with business and tech teams should be seamless, such that users can leverage domain knowledge and drive adoption. By promoting and facilitating collaboration, the platform helps its users build more accurate models and quickly make actual business impact.

Versioning

All artifacts of an ML system should be versioned. Artifacts are anything that is an input or output of the system, such as data, configurations, models, etc. By baking in versioning for all artifacts, the platform enables users to fully control what is used in production.
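
One common way to version artifacts such as configurations is to derive the version identifier from the content itself. The snippet below is a minimal sketch of that idea and not a description of Picnic’s actual tooling; the function names `artifact_version` and `config_version` and the 12-character identifier length are assumptions made for illustration.

```python
import hashlib
import json


def artifact_version(payload: bytes) -> str:
    """Return a short, deterministic version id derived from the artifact's bytes."""
    # Hypothetical convention: the first 12 hex characters of the SHA-256 digest.
    return hashlib.sha256(payload).hexdigest()[:12]


def config_version(config: dict) -> str:
    """Version a JSON-serializable configuration by its canonical form."""
    # Sorting keys makes logically equal configs produce identical bytes,
    # so key order does not change the version id.
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return artifact_version(canonical.encode("utf-8"))
```

With this approach, two configurations that differ only in key order get the same version, while any change to a value yields a new one, which makes it easy to tell exactly which inputs were used in production.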

Testing

Each part of an ML system should be tested. Tests should not only cover the training or inference code, but also the data and the model. By giving users the tools and practices to test properly, users can have the confidence that their changes work as intended before deployment.
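
To make the idea of testing data (and not only code) a bit more tangible, here is a small sketch of a data check that could run before training. It is an illustration under assumptions, not a platform feature: the function `validate_rows` and the column names `order_id` and `basket_size` are hypothetical.

```python
def validate_rows(rows, required, ranges):
    """Return a list of human-readable problems found in the input rows.

    rows:     list of dicts, one per record
    required: column names that must be present and not None
    ranges:   mapping of column name -> (min, max) inclusive bounds
    """
    errors = []
    for i, row in enumerate(rows):
        for col in required:
            if row.get(col) is None:
                errors.append(f"row {i}: missing value for '{col}'")
        for col, (low, high) in ranges.items():
            value = row.get(col)
            # Missing values are reported by the required check, not here.
            if value is not None and not (low <= value <= high):
                errors.append(f"row {i}: '{col}'={value} outside [{low}, {high}]")
    return errors


# Hypothetical usage: fail fast if the training data looks wrong.
rows = [
    {"order_id": 1, "basket_size": 12},
    {"order_id": None, "basket_size": -3},
]
problems = validate_rows(rows, required=["order_id"], ranges={"basket_size": (0, 500)})
```

A check like this can be wired into the same test suite as the training code, so that a broken upstream table is caught before a model is retrained on it.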

Reproducibility

Data, models and code should be easily reproducible. By embedding reproducibility across the machine learning lifecycle, the platform enables users to translate experimental results to production performance.
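
A small but typical building block of reproducibility is making every source of randomness explicit. The sketch below shows a seeded train/test split using only the Python standard library; the helper name `split_train_test` is an assumption for illustration, and real projects would usually also pin dependency and data versions.

```python
import random


def split_train_test(items, test_fraction, seed):
    """Split items into (train, test) deterministically for a given seed."""
    # A dedicated Random instance avoids depending on global random state.
    rng = random.Random(seed)
    shuffled = list(items)
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


# Running the split twice with the same seed yields identical partitions.
train_a, test_a = split_train_test(range(10), test_fraction=0.2, seed=42)
train_b, test_b = split_train_test(range(10), test_fraction=0.2, seed=42)
```

Because the seed is a plain argument, it can be stored alongside the experiment’s configuration and reused when the experiment is promoted to production.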

Continuity

ML systems should be automatically and continuously updated with new data, models, and code. By automating the mundane steps, the platform allows users to focus on their craft and minimize errors caused by manual work.

Scalability

ML systems should scale according to their load and business value. By abstracting away complexity, the platform helps users to build systems that are future-proof, without them having to be experts in software engineering or infrastructure.

Monitoring

Each part of an ML system should be extensively monitored. By making data, models and deployments transparent, the platform gives users actionable insights and alerts to achieve operational excellence.
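
As one example of what monitoring a model’s input data could look like, the sketch below computes the Population Stability Index (PSI), a commonly used drift score that compares the binned distribution of a feature in live data against a reference sample. This is an illustration and not Picnic’s monitoring setup: the function names, the bin edges and the `eps` floor for empty bins are all assumptions, and a sensible alerting threshold depends on the use case.

```python
import math
from bisect import bisect_right


def bin_fractions(values, edges, eps=1e-6):
    """Fraction of values per bin, where sorted `edges` define len(edges) + 1 bins."""
    if not values:
        raise ValueError("values must not be empty")
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    # Floor each fraction at eps so the logarithm below is always defined.
    return [max(c / len(values), eps) for c in counts]


def psi(reference, live, edges):
    """Population Stability Index between a reference sample and a live sample."""
    ref = bin_fractions(reference, edges)
    cur = bin_fractions(live, edges)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


reference = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
shifted = [v + 5 for v in reference]
edges = [2.5, 5, 7.5]

no_drift = psi(reference, reference, edges)  # identical samples give a score of 0
drift = psi(reference, shifted, edges)       # a shifted sample gives a positive score
```

A score like this only becomes actionable once it is tied to an alert with a clear owner and a clear follow-up, which is exactly the kind of default a platform can provide.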

Governance

The platform should strive for transparency and accountability of ML systems. Through best practices and sensible defaults, the platform encourages users to build only fair and ethical models.

Working towards our vision

We will tackle the building of the Data Science Platform with a product-oriented approach. We recognize that MLOps is a field that is still very much in flux. Start-ups trying to solve some new challenge within the machine learning life cycle are constantly popping up. At the same time, none of the big machine learning platform offerings is able to cater to all use cases and cover all challenges you might encounter (AI Infrastructure Alliance, 2022). In other words, it is hard to find something that meets all your requirements (not just on paper, but in practice) and doesn’t become outdated within a year.

We will therefore iteratively expand our existing set of tooling by selecting the best-of-breed solution for each component, whether that is a vendor solution or something built in-house. By taking a more modular approach, we aim to be independent of any single platform and flexible enough to swap out components in this rapidly changing field. This also allows us to first focus on the challenges considered most important by our users and to innovate quickly.

Sounds interesting? Make sure to follow the Picnic Engineering blog and keep your eyes open for new blog posts! If you are interested in helping to build the most data-driven modern milkman, head over to https://picnic.app/careers/ and take a look at our vacancies!