Delivering the right events at low latency and high volume is critical to Picnic’s system architecture. In our previous blog post, Dima Kalashnikov explained how we configure our Internal services pipeline in the Analytics Platform. In this post, we explain how our team automates the creation of new data pipeline deployments.

The step towards automation was an important improvement for us, as the previous setup was manual, slow, and error-prone. Now, we can have a pipeline ready in minutes. This was not as easy as it sounds, as you will see in this post.

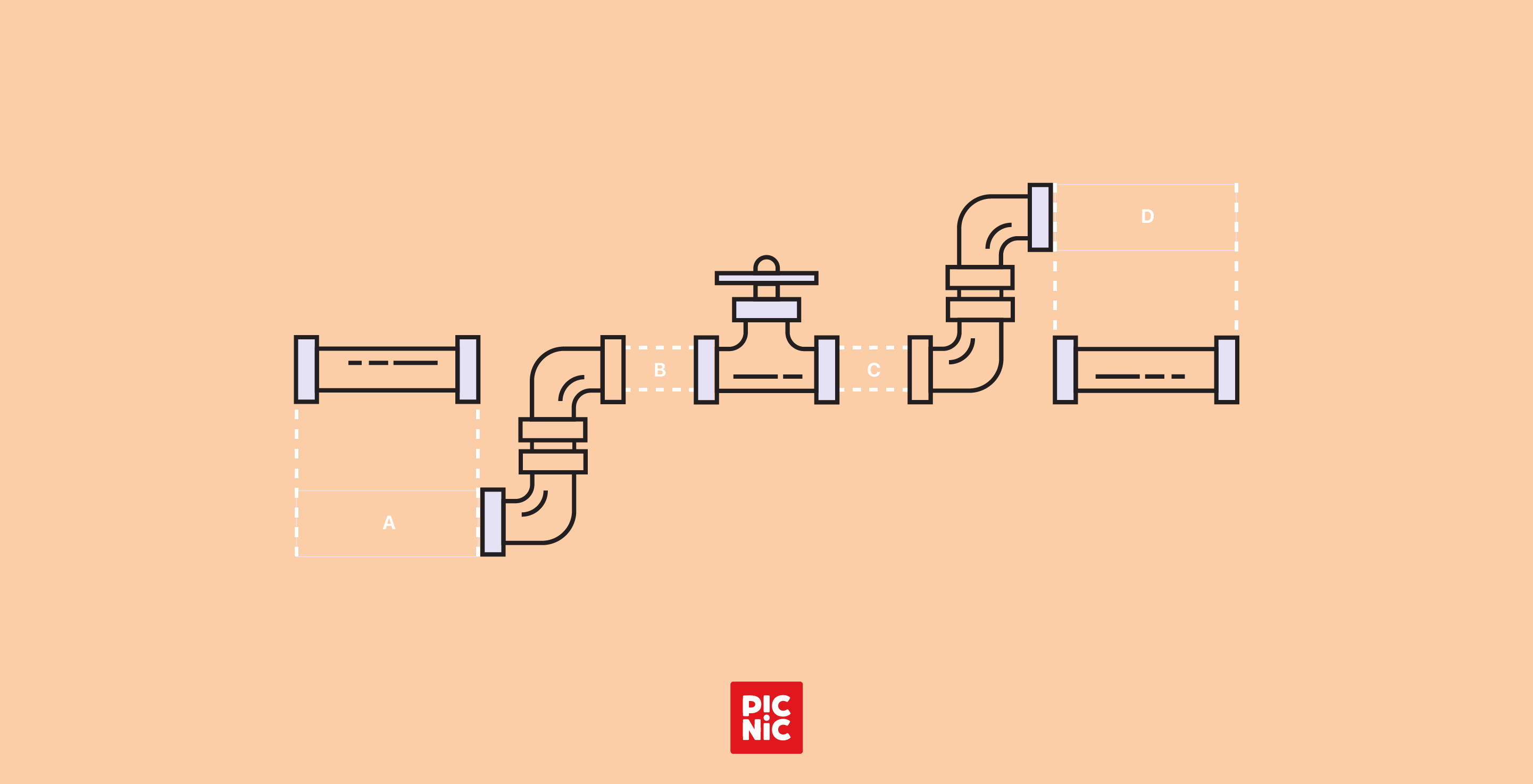

A successful pipeline deployment involves multiple components, and that creates a problem. The components are created in separate steps, so we need to ensure that they are created atomically and that the pipeline remains in a consistent state: the deployment should either succeed completely or fail entirely. We cannot end up in a situation where only some of the components have been created.

The question is: how do we ensure the atomicity of the pipeline deployment? We decided to implement the Saga pattern to solve this issue!

What is the Saga pattern?

The Saga pattern is based on the idea of a “saga”: a long story of a hero’s journey. In this case, our hero wants to deploy a new pipeline in a consistent manner. The steps of this story are actually local transactions distributed across the various components, all coordinated to achieve our larger goal. A true heroic story indeed! Each local transaction is designed to be reversible so that if something goes wrong during the process, the system can roll back any changes that have been made.

The key benefit of the Saga pattern is that it allows the orchestrating system to maintain consistency and integrity even when other components fail. If one component fails during a transaction, the Saga pattern can be used to roll back the changes made in the other components so far.

How does it work?

There are two main principles supporting the Saga pattern:

- Orchestration: the process of coordinating the actions of multiple components in order to achieve a larger goal.

- Compensation: the process of rolling back any changes that have been made during a transaction if something goes wrong.

How did we implement the Saga pattern?

To implement a Saga pattern, we need to define the steps in the workflow as independent transactions and the compensation logic for each step in the event of failure. The Orchestrator is responsible for coordinating the execution of these transactions and handling any compensation actions. We updated our existing service handling pipeline automation with this new Saga-based orchestration engine.
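
To make the idea of "a step plus its compensation" concrete, here is a minimal in-process sketch. It is not our production code: the `SagaStep` and `run_saga` names and the example step names are hypothetical, and the steps run synchronously in one process rather than across real components.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SagaStep:
    """One local transaction plus the action that undoes it (hypothetical type)."""
    name: str
    execute: Callable[[], None]      # performs the local transaction
    compensate: Callable[[], None]   # reverses the local transaction


def run_saga(steps: List[SagaStep]) -> bool:
    """Run steps in order; on failure, compensate completed steps in reverse."""
    completed: List[SagaStep] = []
    for step in steps:
        try:
            step.execute()
        except Exception:
            # Undo only what already succeeded, newest first.
            for done in reversed(completed):
                done.compensate()
            return False
        completed.append(step)
    return True


# Hypothetical example: the second step fails, so the first one is undone.
log: List[str] = []


def fail() -> None:
    raise RuntimeError("queue creation failed")


steps = [
    SagaStep("create_topic",
             lambda: log.append("topic created"),
             lambda: log.append("topic deleted")),
    SagaStep("create_queue", fail, lambda: log.append("queue deleted")),
]
ok = run_saga(steps)
```

In this run `ok` is `False`, and `log` holds the creation of the topic followed by its deletion. The failed step's own compensation is never called, because it made no change that needs undoing.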

Design:

- The PipelineProcessor validates and processes the incoming request, submits it to the Orchestrator, and finally communicates the result to the client.

- The Orchestrator is the core component that coordinates all the transactions across all the components. It takes a predefined set of pipeline steps and executes them in the given order.

- The Orchestrator receives the request and begins executing the transaction by sending a request to the first step in the workflow.

- The first step processes the request and publishes its response to a Kafka topic, which the Orchestrator listens to.

- The Orchestrator acts on each event. On a “SUCCESSFUL” event, it executes the next step. On a “FAILED” event, it triggers a “ROLLBACK”, which cascades through all the pipeline steps executed so far.

- If all the steps in the workflow execute successfully, the Orchestrator sends a final response to the client to indicate that the transaction completed successfully.

- If one of the steps fails, the Orchestrator executes the compensating transactions (rollback) to undo the changes made by the previous steps, and sends a failed response to the client.

- There are some cases where the rollback could also fail. If a rollback fails, a “ROLLBACK_FAILED” status is sent to the client.

- When the rollback also fails, the engineers are alerted via Slack channels for manual recovery or cleanup.
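
The event flow described in the list above can be sketched as a small state machine. This is an illustration under assumptions, not our actual engine: the class and method names are hypothetical, the Kafka topic is replaced by direct `on_event` calls, the step list is assumed to be non-empty, and only the steps that succeeded before the failure are rolled back.

```python
from typing import List, Optional


class Orchestrator:
    """Walks a fixed, non-empty list of step names, driven by result events."""

    def __init__(self, steps: List[str]) -> None:
        self.steps = steps
        self.position = 0                 # index of the step we are waiting on
        self.rolling_back = False
        self.commands: List[str] = []     # commands "sent" to the components
        self.result: Optional[str] = None  # final status for the client
        self._send("EXECUTE")

    def _send(self, command: str) -> None:
        self.commands.append(f"{command}:{self.steps[self.position]}")

    def on_event(self, status: str) -> None:
        """Handle a SUCCESSFUL or FAILED event for the awaited step."""
        if self.result is not None:
            return  # saga already finished; ignore late events
        if self.rolling_back:
            if status == "SUCCESSFUL":
                self._rollback_next()
            else:
                self.result = "ROLLBACK_FAILED"  # needs manual cleanup
        elif status == "SUCCESSFUL":
            if self.position == len(self.steps) - 1:
                self.result = "SUCCESSFUL"
            else:
                self.position += 1
                self._send("EXECUTE")
        else:
            self._rollback_next()  # FAILED: start the cascading rollback

    def _rollback_next(self) -> None:
        """Roll back the previous successful step, or finish if none is left."""
        if self.position == 0:
            self.result = "FAILED"
        else:
            self.rolling_back = True
            self.position -= 1
            self._send("ROLLBACK")


# Hypothetical run: the second of three steps fails, the rollback succeeds.
saga = Orchestrator(["create_topic", "create_queue", "deploy_connector"])
for event in ["SUCCESSFUL", "FAILED", "SUCCESSFUL"]:
    saga.on_event(event)
```

After these three events, `saga.result` is `"FAILED"` and `saga.commands` shows the two executions followed by the rollback of the first step. Feeding a `"FAILED"` event during the rollback phase would instead end the saga with `"ROLLBACK_FAILED"`.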

Wrapping up:

In this post, we have explained how we automate our pipeline deployments at Picnic. This setup has prevented a lot of manual errors, and the time taken to deploy a pipeline has dropped from days to seconds. The automation lets us create and roll back pipelines without manual intervention.

We also considered Apache Airflow for our pipeline automation, but we chose this minimalistic setup because our use case is very simple at the moment and we did not want to maintain another big service like Apache Airflow.

This setup also has a drawback: it does not offer “read isolation”¹. For example, we could see the RabbitMQ queues being created and then, a short while later, deleted by a compensating transaction. Another drawback at the moment is that the pipeline steps are provided as a list; in the future, we would like to supply them in the form of a “Directed Acyclic Graph” (DAG) DSL.
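
We have not built the graph-based version yet, so the following is only a sketch of one possible direction. It uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9+) to derive an execution order from declared dependencies. The step names and the dependency map are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical steps; each key maps to the set of steps it depends on.
dependencies = {
    "create_topic": set(),
    "create_queue": set(),
    "deploy_connector": {"create_topic", "create_queue"},
}

# Every step appears after all of its dependencies.
order = list(TopologicalSorter(dependencies).static_order())

# Compensation would run in the opposite direction.
rollback_order = list(reversed(order))
```

Here `deploy_connector` always comes last in `order` and first in `rollback_order`. The relative order of the two independent steps is not something a caller should rely on.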

[1]: read isolation: This isolation level guarantees that any data read is committed at the moment it is read.

Erdem Erbas
